China plans strict AI rules to protect children and tackle suicide risks | EUROtoday

Osmond Chia, Business reporter

Getty Images

China has proposed strict new rules for artificial intelligence (AI) to provide safeguards for children and to prevent chatbots from offering advice that could lead to self-harm or violence.

Under the planned regulations, developers will also need to ensure that their AI models do not generate content that promotes gambling.

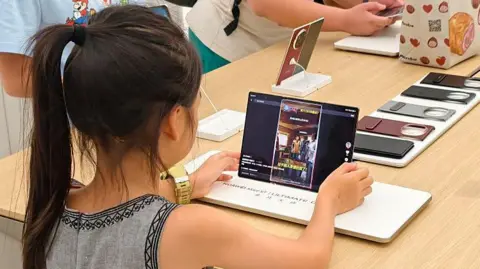

The announcement comes after a surge in the number of chatbots being launched in China and around the world.

Once finalised, the rules will apply to AI services in China, marking a major move to regulate the fast-growing technology, which has come under intense scrutiny over safety concerns this year.

The draft rules, which were published at the weekend by the Cyberspace Administration of China (CAC), include measures to protect children. These include requiring AI firms to offer personalised settings, set time limits on usage and obtain consent from guardians before providing emotional companionship services.

Chatbot operators must have a human take over any conversation related to suicide or self-harm and immediately notify the user’s guardian or an emergency contact, the administration said.

AI providers must ensure that their services do not generate or share “content that endangers national security, damages national honour and interests [or] undermines national unity”, the statement said.

The CAC said it encourages the adoption of AI, for example to promote local culture and to create companionship tools for the elderly, provided that the technology is safe and reliable. It also called for feedback from the public.

Chinese AI firm DeepSeek made headlines worldwide this year after it topped app download charts.

This month, two Chinese startups, Z.ai and Minimax, which together have tens of millions of users, announced plans to list on the stock market.

The technology has quickly gained huge numbers of subscribers, with some using it for companionship or therapy.

The impact of AI on human behaviour has come under increased scrutiny in recent months.

Sam Altman, the head of ChatGPT-maker OpenAI, said this year that the way chatbots respond to conversations related to self-harm is among the company’s most difficult problems.

In August, a family in California sued OpenAI over the death of their 16-year-old son, alleging that ChatGPT encouraged him to take his own life. The lawsuit marked the first legal action accusing OpenAI of wrongful death.

This month, the company advertised for a “head of preparedness” who will be responsible for defending against risks that AI models pose to human mental health and cybersecurity.

The successful candidate will be responsible for tracking AI risks that could cause harm to people. Mr Altman said: “This will be a stressful job, and you’ll jump into the deep end pretty much immediately.”

If you are suffering distress or despair and need support, you could speak to a health professional or an organisation that offers support. Details of help available in many countries can be found at Befrienders Worldwide: www.befrienders.org.

In the UK, a list of organisations that can help is available at bbc.co.uk/actionline. Readers in the US and Canada can call the 988 suicide helpline or visit its website.

https://www.bbc.com/news/articles/c8dydlmenvro?at_medium=RSS&at_campaign=rss