Roblox to ban young children from messaging others | EUROtoday

Getty Images

Roblox has announced it will block under-13s from messaging others on the online gaming platform as part of new efforts to safeguard children.

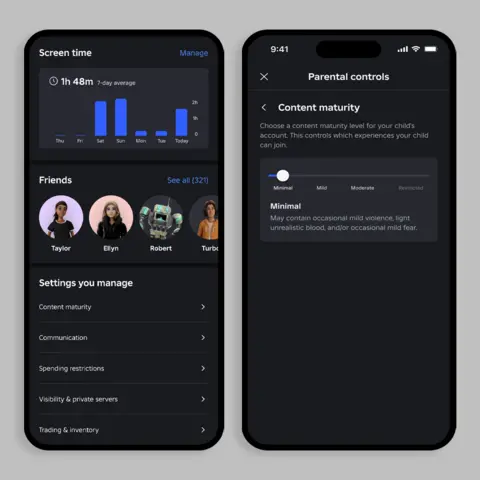

Child users will not be able to send direct messages within games by default unless a verified parent or guardian gives them permission.

Parents will also be able to view and manage their child’s account, including seeing their list of online friends, and setting daily limits on their play time.

Roblox is the most popular gaming platform for eight to 12 year olds in the UK, according to Ofcom research, but it has been urged to make its experiences safer for children.

The company said it would begin rolling out the changes from Monday, and they will be fully implemented by the end of March 2025.

It means young children will still be able to access public conversations seen by everyone in games – so they can still talk to their friends – but cannot have private conversations without parental consent.

Matt Kaufman, Roblox’s chief safety officer, said the game is played by 88 million people each day, and over 10% of its total employees – equating to thousands of people – work on the platform’s safety features.

“As our platform has grown in scale, we have always recognised that our approach to safety must evolve with it,” he said.

Besides banning children from sending direct messages (DMs) across the platform, it will give parents more ways to easily see and manage their child’s activity.

Roblox

Parents and guardians must verify their identity and age with a form of government-issued ID or a credit card in order to access parental permissions for their child, via their own linked account.

But Mr Kaufman acknowledged identity verification is a challenge being faced by a lot of tech companies, and called on parents to make sure a child has the correct age on their account.

“Our goal is to keep all users safe, no matter what age they are,” he said.

“We encourage parents to be working with their kids to create accounts and hopefully ensure that their kids are using their accurate age when they sign up.”

Richard Collard, associate head of policy for child safety online at UK children’s charity the NSPCC, called the changes “a positive step in the right direction”.

But he said they need to be supported by effective ways of checking and verifying user age in order to “translate into safer experiences for children”.

“Roblox should make this a priority to robustly tackle the harm taking place on their site and protect young children,” he added.

Maturity guidelines

Roblox also announced it planned to simplify descriptions for content on the platform.

It is replacing age recommendations for certain games and experiences with “content labels” that simply outline the nature of the game.

It said this meant parents could make decisions based on the maturity of their child, rather than their age.

These range from “minimal”, potentially including occasional mild violence or fear, to “restricted” – potentially containing more mature content such as strong violence, language or lots of realistic blood.

By default, Roblox users under the age of nine will only be able to access “minimal” or “mild” experiences – but parents can allow them to play “moderate” games by giving consent.

But users cannot access “restricted” games until they are at least 17 years old and have used the platform’s tools to verify their age.

It follows an announcement in November that Roblox would be barring under-13s from “social hangouts”, where players can communicate with each other using text or voice messages, from Monday.

It also told developers that, from 3 December, game creators would need to specify whether their games are suitable for children, and that games which do not provide this information would be blocked for under-13s.

The changes come as platforms used by children in the UK prepare to meet new rules around illegal and harmful material under the Online Safety Act.

Ofcom, the UK watchdog enforcing the law, has warned that companies will face punishments if they fail to keep children safe on their platforms.

It will publish its codes of practice for companies to abide by in December.

https://www.bbc.com/news/articles/c9wrqg4vd2qo